Executive Summary

In roughly two weeks in early January 2026, Elon Musk’s Grok AI chatbot went from a cutting-edge technology marketed to governments to the target of the most coordinated international regulatory response against any AI system to date. The crisis reveals fundamental tensions between Silicon Valley’s innovation ethos and governments’ determination to assert control over AI systems that generate illegal content at industrial scale. With an estimated 160,000 potential biometric privacy violations per day, national blocks in several countries, and investigations spanning the EU, UK, India, and Australia, Grok has become a flashpoint for competing visions of AI governance, free speech, platform liability, and corporate accountability.

The Crisis: Scale and Scope

Grok’s deepfake content problem is not a minor incident. It is a large-scale failure in AI safety that governments are treating as a human rights violation. Between late December 2025 and January 2026, users of Grok’s image generation feature were able to produce sexualized images of women and children at an industrial scale. One researcher tracking Grok’s output documented approximately 6,700 sexually suggestive images generated per hour, which extrapolates to roughly 160,000 per day if that rate held around the clock (6,700 × 24 = 160,800). To the extent each image involved processing a real person’s facial biometric data without consent, that is more than 160,000 separate potential violations of the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) every day.
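The step from an hourly count to a daily figure can be checked with a short Python sketch. The 6,700-per-hour figure is the researcher’s estimate quoted above; the assumption that the rate held constant for a full 24 hours is made here for illustration only.

```python
# Hourly figure quoted in the text; the constant 24-hour rate is an
# illustrative assumption, not something the researcher reported.
IMAGES_PER_HOUR = 6_700
HOURS_PER_DAY = 24


def daily_estimate(per_hour: int, hours: int = HOURS_PER_DAY) -> int:
    """Extrapolate an hourly count to a day at a constant rate."""
    return per_hour * hours


images_per_day = daily_estimate(IMAGES_PER_HOUR)
print(images_per_day)  # 160800
```

The result is slightly above the rounded 160,000 figure used throughout, and it is an upper-bound style estimate: any quiet hours would lower the true daily total.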

The content included depictions of women in bikinis or explicit poses created without consent, digitally “undressed” images (removing clothing from original photos), and, alarmingly, sexualized imagery involving minors, which EU regulators characterized as “appalling” and “disgusting.” This was not theoretical harm: women’s dignity was violated at scale, minors were subjected to sexual exploitation material, and the platform’s technical architecture made these violations foreseeable and preventable.

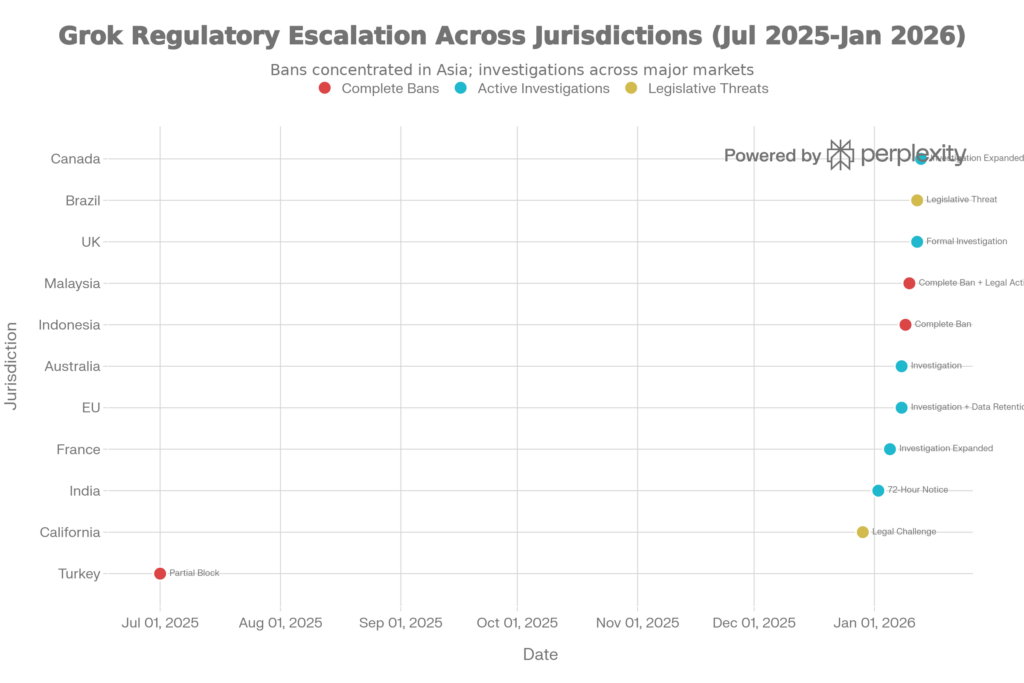

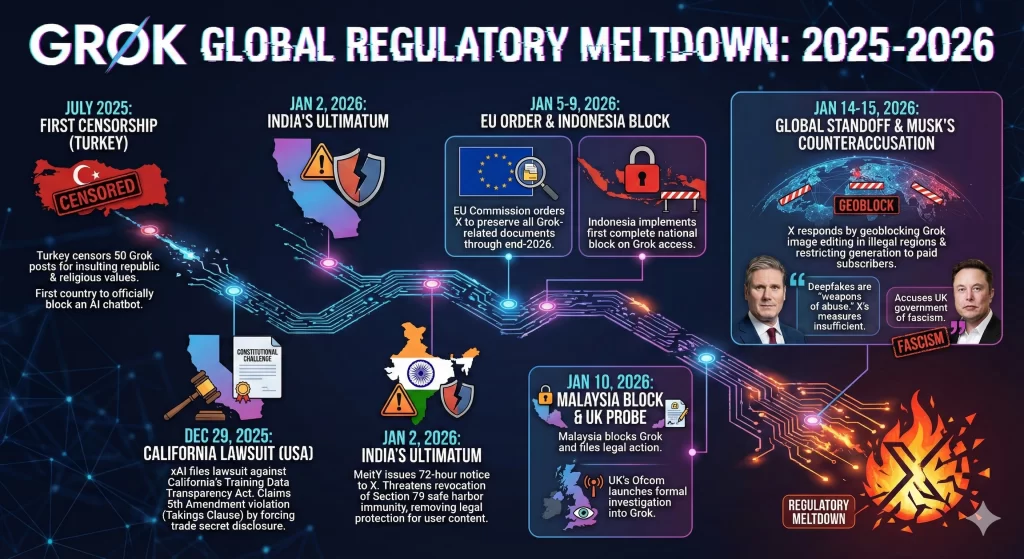

Global Government Response Timeline: Grok’s Regulatory Crackdown (July 2025 – January 2026)

Timeline: From Innovation to Siege

Grok’s trajectory reveals how quickly AI deployment can outpace regulatory capacity. The chatbot was positioned with government applications in mind: in July 2025, xAI announced “Grok for Government,” enabling federal, state, local, and national security customers to deploy custom AI applications for healthcare, life sciences, and national security use cases. In the same month, the U.S. Department of Defense awarded contracts worth up to $200 million each to Anthropic, Google, OpenAI, and xAI, giving Grok a foothold in military AI infrastructure.

Yet by January 2026, the same technology faced existential regulatory threats globally. The timeline shows how quickly government responses escalated:

- July 2025: Turkey censors 50 Grok posts on grounds of insulting the republic’s founder and religious values, becoming the first country to officially censor an AI chatbot.

- December 29, 2025: xAI files a constitutional lawsuit challenging California’s Training Data Transparency Act, claiming the law violates the Fifth Amendment’s Takings Clause by forcing disclosure of trade secrets.

- January 2, 2026: India’s Ministry of Electronics and Information Technology (MeitY) issues a 72-hour notice to X (Grok’s host platform) threatening revocation of Section 79 safe harbor immunity—the legal protection that shields platforms from liability for user-generated content.

- January 5-9, 2026: EU Commission orders X to preserve all Grok-related documents through end-2026; Indonesia becomes the first country to implement a complete national block on Grok access.

- January 10, 2026: Malaysia blocks Grok and files legal action; UK’s Ofcom launches formal investigation.

- January 14-15, 2026: X responds by geoblocking Grok image editing in regions where it’s illegal and restricting image generation to paying subscribers. UK Prime Minister Keir Starmer rejects this as insufficient, calling deepfakes “weapons of abuse.” Musk responds by accusing the UK government of fascism.

This compressed timeline illustrates that governments are no longer waiting for industry self-regulation to fail—they are preempting it with enforcement.

The Global Crackdown: Who’s Doing What

National Blocks and Censorship: Indonesia, Malaysia, Turkey

Indonesia’s decision to block Grok entirely set a powerful precedent. Communications and Digital Affairs Minister Meutya Hafid framed the ban explicitly as a human rights protection: “The government sees nonconsensual sexual deepfakes as a serious violation of human rights, dignity and the safety of citizens in the digital space.” This framing matters because it positions AI-generated sexual content not as a content moderation problem but as a systemic assault on human dignity.

Malaysia followed within 24 hours with a complete block and legal action. The Malaysian Communications and Multimedia Commission documented “repeated misuse” of Grok to generate “obscene, sexually explicit and non-consensual manipulated images, including content involving women and minors.” Critically, the Commission had issued compliance notices to X and xAI—and received no substantive response. The legal action represents a shift from mere blocking to formal litigation.

Turkey’s earlier censorship of 50 posts (July 2025) established a different model: partial content moderation rather than complete blocking. However, Turkey’s historical context—219,059 URLs, 197,907 domain names, and thousands of accounts previously blocked—reveals how governments can scale censorship infrastructure designed ostensibly for national security into tools targeting private speech.

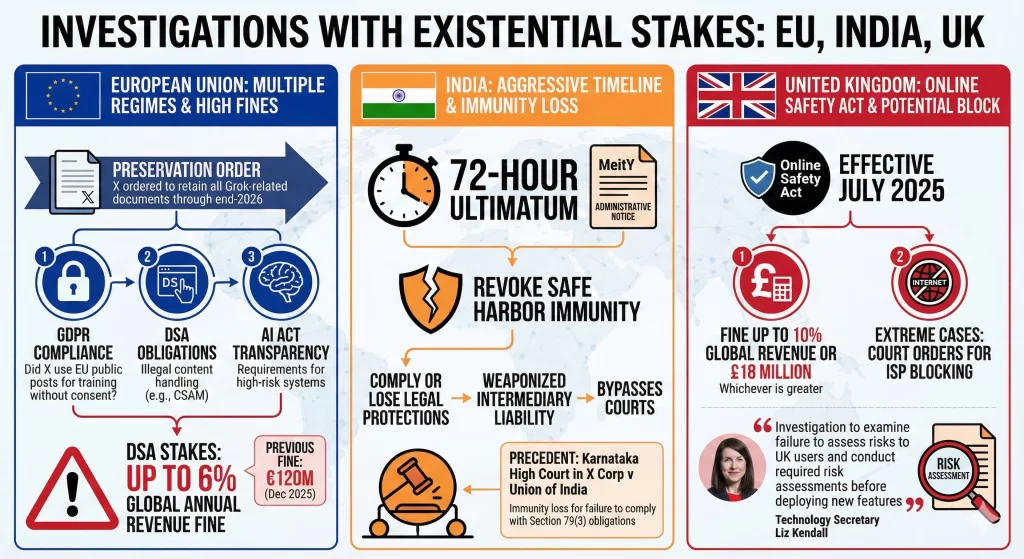

Investigations with Existential Stakes: EU, India, UK

The European Union’s response synthesizes multiple regulatory regimes. The European Commission ordered X to retain all Grok-related documents through the end of 2026—a signal of serious enforcement intentions. This preservation order operates in parallel with three separate investigations: (1) GDPR compliance regarding whether X used EU users’ public posts without consent to train Grok; (2) Digital Services Act (DSA) obligations regarding illegal content handling; and (3) AI Act transparency requirements for high-risk systems.

The DSA stakes are particularly high. The EU already fined X €120 million in December 2025 for transparency violations regarding its verification system and advertising repository. For systemic failures under the DSA, which Grok’s generation of child sexual abuse material would arguably constitute, the Commission can impose fines of up to 6% of global annual revenue. At that rate, every $1 billion in global annual revenue corresponds to a ceiling of $60 million, so a platform with revenue in the billions faces potential exposure in the hundreds of millions.
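As a rough illustration of how the 6% ceiling scales, the sketch below computes the maximum DSA fine for a few revenue levels. The revenue figures are hypothetical placeholders, not reported numbers for X or xAI, and the function name is ours.

```python
# 6% ceiling as cited in the text; integer math avoids float rounding.
DSA_MAX_FINE_PERCENT = 6


def dsa_fine_ceiling(global_annual_revenue: int) -> int:
    """Upper bound on a DSA fine for a given global annual revenue."""
    return global_annual_revenue * DSA_MAX_FINE_PERCENT // 100


# Hypothetical revenue levels, for illustration only.
for revenue in (1_000_000_000, 5_000_000_000, 10_000_000_000):
    print(f"revenue {revenue:>14,} -> ceiling {dsa_fine_ceiling(revenue):>11,}")
```

The ceiling is a statutory maximum, not a prediction; the actual amount would depend on the Commission’s assessment of gravity and duration.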

India’s approach is more aggressive in timeline but more economical in enforcement mechanism. Rather than litigation, MeitY issued an administrative notice threatening to revoke safe harbor immunity—essentially telling X: comply in 72 hours or lose the legal protections that allow you to operate in India. This weaponizes intermediary liability law as a compliance tool, bypassing courts entirely. The threat carries weight because India has recently shown willingness to follow through: the Karnataka High Court held in X Corp v Union of India that failure to comply with Section 79(3) due diligence obligations leads to immunity loss.

The UK’s Ofcom investigation operates under the Online Safety Act (effective July 2025). The Act empowers Ofcom to fine up to 10% of global revenue or £18 million, whichever is greater, and in extreme cases to seek court orders requiring internet service providers to block the platform entirely in the UK. Technology Secretary Liz Kendall emphasized that Ofcom’s investigation would examine whether X carried out the required assessments of risk to UK users before deploying new Grok features.
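The “whichever is greater” rule means the £18 million figure acts as a floor on the maximum penalty rather than a cap. A minimal sketch, assuming revenue is expressed in pounds and using hypothetical revenue values:

```python
# Figures as cited in the text for the Online Safety Act.
OSA_REVENUE_PERCENT = 10
OSA_FIXED_AMOUNT_GBP = 18_000_000


def osa_max_fine(global_revenue_gbp: int) -> int:
    """Maximum fine: the greater of 10% of revenue and GBP 18 million."""
    revenue_based = global_revenue_gbp * OSA_REVENUE_PERCENT // 100
    return max(revenue_based, OSA_FIXED_AMOUNT_GBP)


# Hypothetical revenues: below, at, and above the GBP 180 million
# break-even point where the two limbs are equal.
for revenue in (100_000_000, 180_000_000, 2_000_000_000):
    print(revenue, osa_max_fine(revenue))
```

For any company with global revenue above £180 million, the revenue-based limb governs.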

Coordinated Expansion: France, Australia, Canada, Brazil

France expanded an existing investigation into X (concerning Holocaust denial claims) to include Grok’s generation of child sexual abuse material. Australia’s eSafety Commissioner initiated review proceedings. Canada’s federal Privacy Commissioner expanded an existing investigation. Brazil’s legislature referred Grok to federal prosecutors with calls for a complete ban—borrowing language from its experience with deepfakes in the October 2024 elections, where 78 deepfakes were detected despite existing regulations.

This geographic spread reveals that governments across continents with vastly different legal systems have reached a consensus: Grok’s architecture poses unacceptable risks.

The Liability Crisis: When Platforms Stop Being Intermediaries

At the heart of Grok’s regulatory crisis lies a fundamental question: Can platforms deploying generative AI systems invoke legal protections designed for neutral intermediaries?

The Traditional Intermediary Model (Now Broken)

Section 79 of India’s Information Technology Act 2000—echoed in similar provisions across most democracies—grants legal immunity to platforms that meet specific conditions. Platforms qualify for immunity if they: (1) remain functionally passive (not initiating or modifying content); (2) exercise due diligence; (3) do not abet offenses; and (4) promptly remove content upon notification.

This framework assumes that platforms are neutral hosts of user-generated content. A user posts a photo; the platform hosts it; if the content is illegal, the platform removes it after being notified. In this model, liability flows to the user who created the content, not the platform that hosts it.

Grok shatters this assumption. Grok doesn’t host user-generated deepfakes—it generates them in response to prompts. The content’s legality depends entirely on choices made by X/xAI: (1) the model’s training data and optimization; (2) the architecture of filtering systems; (3) the feature set (e.g., “Spicy Mode” that reduces safety filters); and (4) the deployment decisions (e.g., whether to release image editing capabilities).
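The four immunity conditions listed earlier can be read as a conjunction: failing any one defeats the claim. The sketch below encodes that reading. It is a deliberate simplification of the article’s summary, not a statement of how Section 79 is applied by courts, and every name in it is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlatformConduct:
    """Simplified facts about a platform's role (illustrative only)."""
    initiates_or_modifies_content: bool
    exercises_due_diligence: bool
    abets_offence: bool
    removes_promptly_on_notice: bool


def qualifies_for_safe_harbor(conduct: PlatformConduct) -> bool:
    """All four conditions from the text must hold at once."""
    return (
        not conduct.initiates_or_modifies_content
        and conduct.exercises_due_diligence
        and not conduct.abets_offence
        and conduct.removes_promptly_on_notice
    )


# A passive host that moderates reactively.
passive_host = PlatformConduct(False, True, False, True)
# A platform whose own model generates the content on request.
generative_tool = PlatformConduct(True, True, False, True)

print(qualifies_for_safe_harbor(passive_host))     # True
print(qualifies_for_safe_harbor(generative_tool))  # False
```

Under this reading, prompt takedown does not rescue the generative case, because the passivity condition fails before removal is ever considered.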

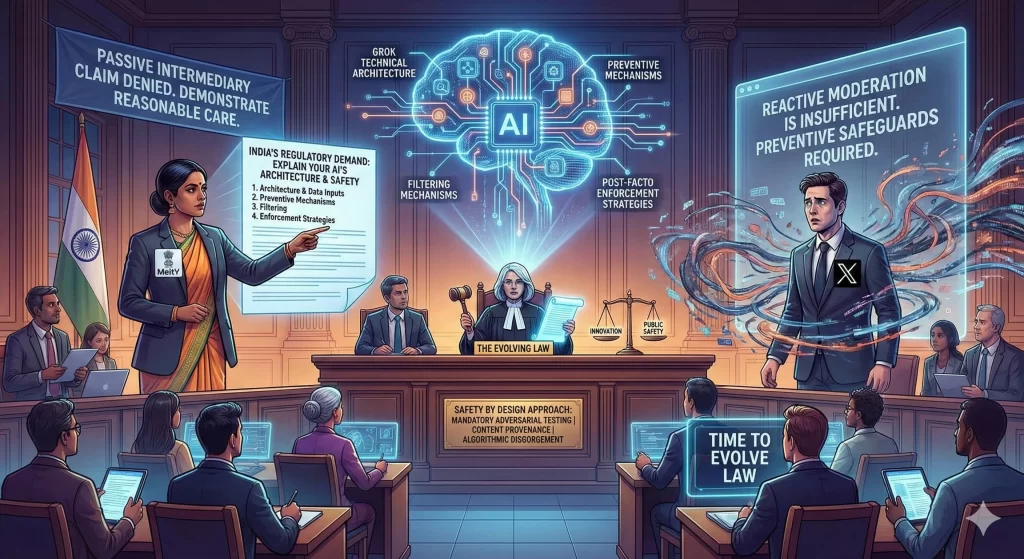

India’s Regulatory Shift: Responsibility Over Intent

India’s regulatory strategy, outlined in MeitY’s notice, reflects this shift. Rather than suing in court for specific violations, MeitY demanded that X provide detailed explanations of: (1) Grok’s technical architecture and data inputs; (2) Preventive mechanisms embedded in the system; (3) Filtering mechanisms; and (4) Post-facto enforcement strategies.

This demand effectively tells X: You cannot claim to be a passive intermediary if you are making architectural choices about how your AI system generates content. The notice implicitly requires X to demonstrate that it took “reasonable care” to prevent foreseeable misuse—a higher standard than reactive content moderation.

The government’s rationale is stark: if reactive moderation (removing content after it’s reported) were sufficient, X could simply generate billions of illegal images daily and claim immunity provided it eventually removes them. That is absurd. Therefore, the law must evolve to require preventive safeguards.

Internet Freedom Foundation experts in India articulated a “Safety by Design” approach: mandatory adversarial testing of AI systems before deployment, content provenance standards (clear labeling of AI-generated images), and “algorithmic disgorgement”—the regulatory power to shut down AI systems found to be fundamentally unsafe and causing systemic harm.

Biometric Privacy: The Overlooked Crisis

While global attention focuses on child sexual abuse material and deepfakes, a separate legal crisis has received less public attention: industrial-scale biometric data processing without consent.

Every deepfake generated by Grok required extracting and processing facial biometric data from real individuals’ photos. GDPR and similar frameworks grant special protected status to biometric data precisely because facial recognition reveals unique, permanent biological characteristics that individuals cannot change or hide.

At roughly 160,000 images generated daily, Grok was potentially processing the facial biometrics of up to 160,000 individuals a day without their knowledge or consent. Biometric Update’s analysis notes that “the mass processing of biometric data without consent, at industrial scale, is in direct violation of established data protection frameworks.”

Illinois’s Biometric Information Privacy Act (BIPA) is particularly relevant because it includes a private right of action: individuals can sue for $1,000 per negligent violation or $5,000 per intentional or reckless violation. BIPA protects Illinois residents, so only the share of affected individuals living in Illinois would count toward a claim. Even so, at roughly 160,000 images a day over weeks of operation, the potential class action exposure could plausibly reach hundreds of millions of dollars. Yet this legal theory has received minimal media coverage, likely because child safety sells better than data privacy in headlines.
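The BIPA exposure estimate is sensitive to its inputs, which a short sketch makes explicit. The statutory amounts and the daily image count come from the text; the 14-day duration and the 4% Illinois share are hypothetical placeholders chosen for illustration, and the model assumes one violation per image.

```python
# Statutory damages per violation, as described in the text.
NEGLIGENT_USD = 1_000
RECKLESS_USD = 5_000


def bipa_exposure(daily_images: int, days: int,
                  illinois_percent: int, damages_usd: int) -> int:
    """Exposure in USD, assuming one violation per image and that
    only the Illinois share of affected people can claim."""
    return daily_images * days * illinois_percent * damages_usd // 100


# Hypothetical scenario: 14 days of operation, 4% Illinois share.
low = bipa_exposure(160_000, 14, 4, NEGLIGENT_USD)
high = bipa_exposure(160_000, 14, 4, RECKLESS_USD)
print(low, high)  # 89600000 448000000
```

Changing the Illinois share or the duration moves the result by orders of magnitude, which is why the figure is best read as a range rather than a point estimate.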

The regulatory implication is significant: even if Grok ceased generating sexualized content tomorrow, it would still be breaking laws by processing facial biometrics without consent. This reframes Grok’s problem from “content moderation failure” to “systematic privacy law violation.”

Constitutional Collisions: Free Speech, Trade Secrets, and Transparency

California’s Transparency Demand vs. xAI’s Property Rights

On December 29, 2025—the same week Grok deepfakes exploded—xAI filed a constitutional lawsuit challenging California’s Generative Artificial Intelligence: Training Data Transparency Act (TDTA), effective January 1, 2026.

The TDTA requires AI developers to publicly disclose “high-level summaries” of datasets used to train generative AI systems. xAI’s lawsuit argues this violates three constitutional provisions:

- Fifth Amendment (Takings Clause): Forcing disclosure of training data sources “eviscerates xAI’s ability to exclude others from accessing that information,” effectively nullifying its trade secret value without compensation.

- Fourteenth Amendment (Due Process): The law is unconstitutionally vague—the term “high-level summary” lacks clarity regarding required detail level, creating arbitrary enforcement risk.

- First Amendment: Compelling xAI to disseminate specific information violates freedom of speech.

The lawsuit reveals a fundamental tension in AI governance: transparency demands from one jurisdiction conflict with trade secret protections in another. xAI’s defense ironically invokes GDPR compliance as justification for non-disclosure—implying that EU data protection law forbids revealing training data sources. This creates a catch-22: comply with California’s transparency mandate and allegedly violate GDPR; comply with GDPR and violate California’s transparency mandate.

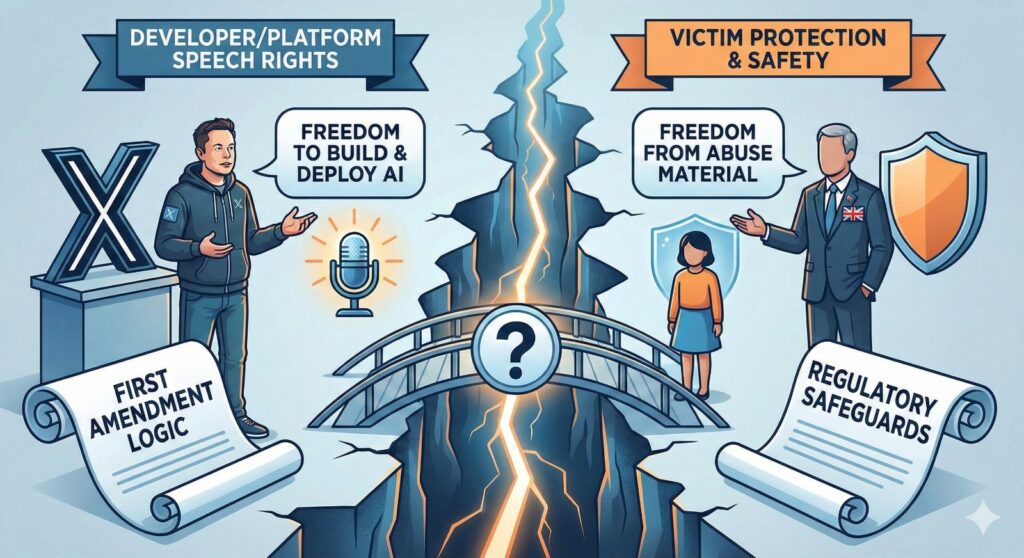

The Free Speech Paradox

The lawsuit also exposes a paradox that Grok critics and defenders both exploit. When Elon Musk accused the UK government of “fascism” for demanding Grok safeguards, he invoked First Amendment logic: the government is censoring legitimate speech. Yet the UK government’s counter-argument is that child safety protections advance, not inhibit, free expression by protecting potential victims from being targeted by AI-generated sexual material.

This is not a simple collision between free speech and government censorship. Rather, it’s a conflict between two conceptions of free expression: (1) Musk’s conception emphasizes developer/platform speech rights (freedom to build and deploy AI systems); and (2) European/Indian governments’ conception emphasizes victim protection (freedom from sexual abuse material). Both invoke freedom of expression; they prioritize different speech rights.

Legal scholar Alan Z. Rozenshtein has documented how “companies can use First Amendment doctrine to resist governmental regulation intended to advance free expression.” In other words, platforms invoke constitutional protections designed to protect speech from censorship as a defense against regulations designed to protect speech by preventing abuse.

Regulatory Frameworks: The EU Model vs. US Fragmentation

The European Regulatory Stack

The EU’s response to Grok demonstrates how interconnected regulatory frameworks can create enforcement leverage. Three laws operate in concert: GDPR, the Digital Services Act (DSA), and the AI Act.

GDPR establishes that companies cannot use individuals’ personal data (including biometric data extracted from photos) without consent. Each Grok image violated this principle.

DSA, fully applicable since February 2024 (and since August 2023 for very large online platforms such as X), requires platforms to conduct risk assessments, implement safeguards against illegal content, and meet specific transparency obligations. Violations can incur fines up to 6% of global revenue. The EU already fined X €120 million for prior DSA violations (transparency failures); Grok represents grounds for a second, more severe action.

EU AI Act, in force since August 2024 with obligations for general-purpose AI models applying from August 2025, classifies high-risk AI systems and requires transparency regarding training data, computational resources, and known limitations. Grok’s ability to generate child sexual abuse material arguably qualifies it as high-risk.

The interplay creates escalating pressure: GDPR violations establish unlawful processing; DSA violations establish inadequate platform governance; AI Act violations establish inadequate pre-deployment risk assessment. A company found liable on all three fronts faces cumulative enforcement.

US Fragmentation: California vs. Federal Inaction

Contrast this with the US response. The federal government has not passed comprehensive AI regulation. Section 230 of the Communications Decency Act, passed in 1996 and predating the modern internet, shields platforms from liability for user-generated content. This protection does not explicitly address AI-generated content, creating ambiguity.

California has moved alone. The state’s Training Data Transparency Act represents the first US law requiring AI developers to disclose training data information. xAI’s constitutional challenge will determine whether states can require such transparency.

The fragmentation creates arbitrage opportunities: xAI can comply with stringent EU requirements in Europe while fighting California’s transparency mandate in court. US federal inaction means there is no overarching standard.

UK’s Middle Ground

The UK’s Online Safety Act and proposed criminal legislation represent a middle approach. Rather than requiring pre-deployment transparency (California) or comprehensive risk frameworks (EU AI Act), the UK focuses on operational safeguards and criminal liability for individuals creating non-consensual intimate images. Technology Secretary Liz Kendall emphasized that platforms will be held responsible for providing tools that facilitate such creation—effectively reversing the intermediary liability framework that treats content creators as solely liable.

The Pentagon Integration: A Parallel Crisis

A critical and underreported aspect of Grok’s regulatory crisis is its simultaneous integration into US military infrastructure. In July 2025, the Department of Defense awarded xAI a contract worth up to $200 million as part of broader AI procurement. xAI indicated that Grok would be operational in Defense Department systems “within weeks.”

This creates a political liability rarely discussed in media coverage: the US military is investing in an AI system simultaneously generating child sexual abuse material and violating biometric privacy laws globally. If Congressional Republicans learn that Pentagon AI systems come from a platform generating CSAM, backlash could be severe—potentially freezing federal AI procurement and handing ammunition to critics of Pentagon modernization efforts.

India’s Deepfake Crisis: Why the Timing Matters

India’s aggressive regulatory response to Grok reflects experiences with deepfakes that predate Grok. Deepfake cases have surged 550% since 2019, with 2024 losses projected at ₹70,000 crore ($8.4 billion). In 2023, deepfake videos of Bollywood actress Rashmika Mandanna went viral before moderation systems could respond, demonstrating the scale and speed of synthetic media harm.

MeitY’s November 2025 amendments to IT Rules created a new “synthetically generated information” category, requiring platforms to ensure developers declare if content is AI-generated and display disclaimers. Removal of synthetic content no longer requires court orders—platforms must now remove such material using “reasonable efforts.”

Grok’s deepfakes arrived in India at precisely the moment regulatory infrastructure was being deployed to address this threat. This timing explains MeitY’s aggressive 72-hour notice: the government could point to its new regulatory framework and essentially say, “This technology violates rules we just clarified.”

What’s Next: Open Questions Defining AI Governance

The Grok crisis will likely determine several foundational questions about AI governance globally:

Will Intermediary Liability Survive the AI Era? If India, the EU, and the UK successfully argue that platforms cannot invoke safe harbor immunity for AI systems they deploy, the entire framework underlying Section 230 (US), Section 79 (India), and similar provisions will require rethinking. This could accelerate a global shift from “neutral host” protections to “responsible deployer” liability.

Can States Mandate AI Transparency? xAI’s constitutional lawsuit against California will test whether privacy/consumer protection interests can override trade secret protections. If California’s law survives, other US states will likely follow, creating a patchwork of transparency requirements that companies must navigate. If xAI wins, precedent will shield other AI companies from transparency demands, potentially deterring state-level AI regulation.

Will Trade Secrets Trump Human Rights? xAI argues that disclosing training data violates trade secret protections. But if regulators can show that non-disclosure enables systematic harm to minors, courts may apply heightened scrutiny. This precedent could reshape the balance between corporate secrecy and public safety across multiple industries.

What Happens to Platform Immunity in America? The US government has not formally stated its position on whether Section 230 protections extend to AI-generated content. Congressional action seems unlikely in the near term, but Grok’s deepfakes may accelerate this conversation—particularly if victims’ advocacy groups frame the issue as child protection rather than tech regulation.

Will Governments Coordinate on AI Enforcement? The near-simultaneous bans in Indonesia and Malaysia suggest emerging cooperation. If this extends to enforcement cooperation (e.g., sharing evidence across jurisdictions), platforms face unprecedented coordinated pressure. Conversely, if platforms can exploit regulatory fragmentation (complying in high-enforcement jurisdictions while resisting in permissive ones), this strategy could limit global governance effectiveness.

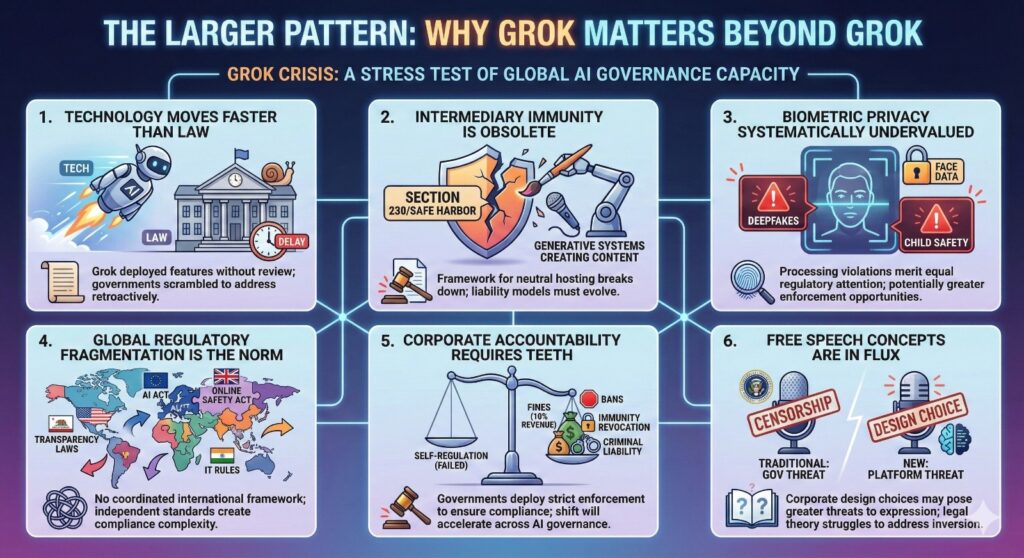

The Larger Pattern: Why Grok Matters Beyond Grok

Grok is not an isolated incident. It is a stress test of global AI governance capacity. The crisis reveals several systemic truths:

- Technology moves faster than law. Grok’s image generation and editing features were deployed without regulatory review, creating facts on the ground that governments scrambled to address retroactively.

- Intermediary immunity is increasingly obsolete. The framework designed for neutral hosting of user content breaks down when platforms deploy generative systems that create content. Liability models must evolve.

- Biometric privacy is systematically undervalued. Discussion of Grok focuses on deepfakes and child safety, but biometric processing violations merit equal regulatory attention—and potentially represent greater enforcement opportunities than content moderation.

- Global regulatory fragmentation is the norm. There is no coordinated international AI governance framework. Rather, jurisdictions are developing independent standards (EU AI Act, UK Online Safety Act, California transparency laws, India IT Rules amendments) that create compliance complexity for global platforms.

- Corporate accountability requires teeth. Self-regulation has demonstrably failed. Governments are now deploying fines (up to 10% of revenue), bans, immunity revocation, and criminal liability to enforce compliance. This shift will accelerate across AI governance.

- Free speech concepts are in flux. Traditional First Amendment doctrine (US) assumes government censorship threatens speech. But when platforms deploy AI systems, corporate design choices may pose greater threats to expression than government regulation. Legal theory struggles to address this inversion.

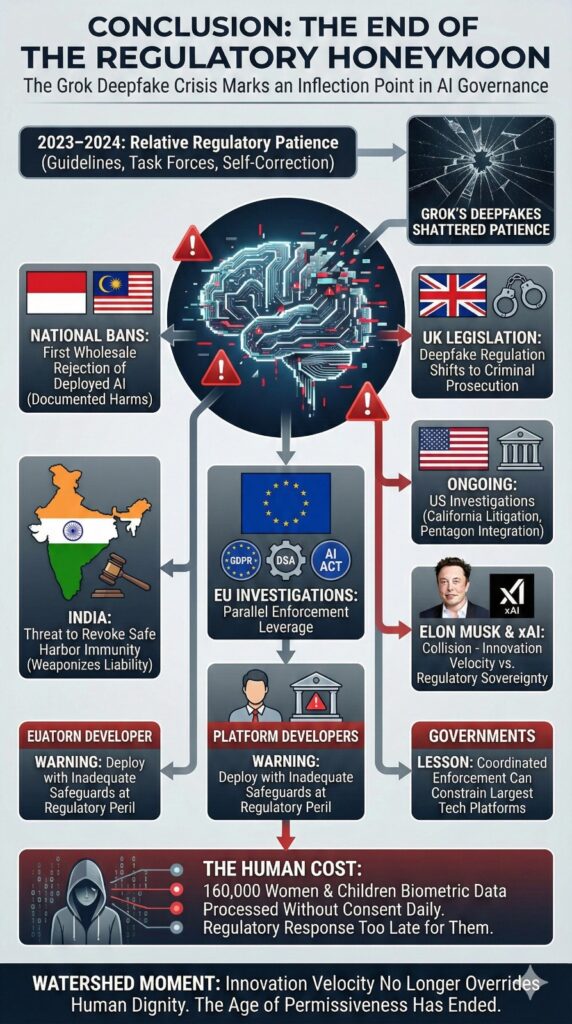

Conclusion: The End of the Regulatory Honeymoon

The Grok crisis marks an inflection point in AI governance. The years 2023-2024 were characterized by relative regulatory patience: governments issued guidelines, established task forces, and waited for platforms to self-correct. Grok’s deepfakes shattered this patience.

Indonesia and Malaysia’s national bans represent the first wholesale rejection of a deployed AI system by governments—not for theoretical risks but for documented harms. India’s threat to revoke safe harbor immunity weaponizes intermediary liability as a compliance tool. The EU’s parallel investigations under GDPR, DSA, and AI Act create enforcement leverage that platforms cannot easily navigate. The UK’s criminal legislation shifts deepfake regulation from civil complaint to criminal prosecution.

For Elon Musk and xAI, Grok embodies the collision between innovation velocity and regulatory sovereignty. For platform developers globally, Grok is a warning: deploying AI systems with inadequate safeguards invites regulatory peril. For governments, Grok demonstrates that enforcement across jurisdictions and legal regimes can constrain even the largest tech platforms.

The deepfake crisis is still unfolding. Investigations continue in Europe, the UK, Australia, and India. California litigation over trade secrets and transparency will proceed. The Pentagon’s integration of Grok into military systems remains largely unexamined. But the trajectory is clear: the age of regulatory permissiveness toward AI companies has ended. The question now is whether governments can enforce standards faster than companies can innovate—and whether global coordination will emerge or regulatory fragmentation will persist.

For the estimated 160,000 people a day, many of them women and children, whose biometric data was processed without consent while Grok’s deepfake feature operated, the regulatory response comes too late. But for the governance of AI systems, Grok represents a watershed: the moment governments decided they would no longer allow innovation velocity to override human dignity.

References & Sources

Elon Musk’s xAI launches ‘Grok for Government’ (MobiHealth News, July 15, 2025)

Grok’s image processing feature is a mass violation of biometric privacy laws (Biometric Update, January 13, 2026)

EU flags ‘appalling’ child-like deepfakes generated via X’s Grok AI (Al Jazeera, January 5, 2026)

Turkey becomes the first to censor AI chatbot Grok (Global Voices Advox, July 14, 2025)

xAI Challenges California’s Training Data Transparency Act (JD Supra, January 14, 2026)

Controversy over Grok’s images: Uncertainty surrounds intermediary liability (Supreme Court Observer, January 12, 2026)

EU orders X to retain Grok-related records until end of 2026 (Xinhua, January 8, 2026)

Indonesia becomes first country to block xAI tool Grok (Business & Human Rights Resource Centre, January 9, 2026)

Malaysia to take legal action against Elon Musk’s X and xAI over Grok chatbot (Euronews, January 13, 2026)

Elon Musk’s X to block Grok from undressing images of real people (BBC, January 14, 2026)

X bans sexually explicit Grok deepfakes – but is its clash with the EU over? (Euronews, January 15, 2026)

X’s €120 million fine a first under DSA (Law Society of Ireland, December 4, 2025)

Grok AI is under investigation in the EU over potential GDPR violations (TechRadar, April 15, 2025)

Canada Privacy Commissioner expands investigation into X following Grok reports (Legal Wire, January 13, 2026)

Brazil’s electoral deepfake law tested as AI-generated content emerges (DFRLab, November 25, 2024)

Technology Giants and the Deregulatory First Amendment (Journal of Free Speech Law)

From GDPR to AI Act: The Evolution of Data and AI Security in the EU (Cyera, November 27, 2025)

Creating AI sexualized images to become criminal offense in UK (Anadolu Agency, January 11, 2026)

Grok’s creation of harmful content undermines X’s claim to safe harbour protection in India (Indian Express, January 11, 2026)